Artificial Intelligence (AI) is one of the most widely discussed topics in education today. While some AI-related topics and tools are new to the field, the underlying principles and practices that apply to any emerging technology in education have been developed over decades. The current interest in AI and a growing demand for AI literacy have opened up opportunities for equitable, evidence-based, innovative solutions in digital skills education, teacher professional development, and strategic education technology (edtech) implementation.

A few months ago, at the UNESCO Digital Learning Week (DLW), we talked with digital learning experts about AI and its impact on learning, teaching, and education systems. The event focused on how public digital learning platforms and generative AI (GenAI) tools can support equitable, inclusive, human-centered learning opportunities and enhance learning outcomes. We used this opportunity to share insights from our AI for Learning and Work initiative and gather new ideas.

Based on our work to advance digital equity and discussions at Digital Learning Week, we propose “the three Es” for education providers and practitioners to consider when seeking to develop or make use of AI for Learning strategies and tools: equity, ethics, and educators.

Equity

The conversation around AI, and GenAI specifically, has exposed a pronounced tension: these tools could either narrow or widen the digital divide. AI has the potential to foster equity and inclusion by providing personalized learning solutions and additional support to individuals who have been excluded from learning and work opportunities. However, research on edtech integration and digital learning shows inequities in access, which were especially pronounced during the COVID-19 pandemic. Additionally, the development and deployment of GenAI systems come with significant costs. There are fears that advances in AI might widen the digital divide, enabling more effective and efficient learning only for those with the right resources and access.

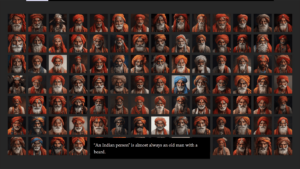

There is also growing concern around data and methods used to train GenAI models. Bias in training data and protocols can introduce new forms of discrimination, which intersect with several uncharted ethical issues on the use of AI, such as reinforcing social inequalities through automated decision-making systems or amplifying misinformation. A possible solution would be to further diversify the data that underlies these AI models, but even that solution exacerbates existing data privacy and sovereignty concerns.

Call to Action: Advocate for policies and initiatives that address the digital divide and promote equal access to AI-powered solutions. Encourage efforts to investigate the impact of AI and edtech integration on educational equity. Establish mechanisms for monitoring and reporting exclusion, bias, and/or discrimination by AI applications in education and advocate for transparency and diversity in the data and methods used to train GenAI. (See the Office of EdTech’s report on AI and the Future of Teaching and Learning, UNESCO’s Guidance for generative AI in education and research, and TeachAI’s AI Guidance for Schools Toolkit.)

Ethics

At Digital Learning Week, attendees debated the potential dangers of AI systems, such as bias, discrimination, misinformation, power concentration, and other unexpected negative social impacts. As GenAI becomes increasingly sophisticated, there are concerns about its potential to undermine human agency and put human intellectual development at risk. Stuart Russell, a computer scientist and AI expert, shared during the DLW plenary: “ChatGPT is designed to pose as a person, it is not designed to facilitate teaching and learning. It is designed to give answers. We need to watch the dosage of ChatGPT and other GenAI tools in instruction and evaluate them before providing guidance. We can do damage to human cognition if we use these tools before we know the implications.”

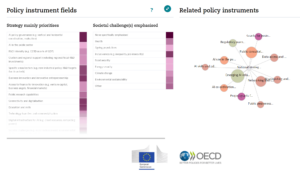

The OECD maintains a live repository of AI policy information.

ChatGPT reached over 100 million monthly active users in January 2023, yet according to a UNESCO survey, only one country had released regulations on GenAI as of July 2023. Many Digital Learning Week participants voiced concerns that AI system design and governance are being left to technology providers with private interests. Educators and education providers are choosing vendors without guidance, which is risky because they may lack the expertise to thoroughly evaluate ethical implications and data security. This can lead to adopting AI technologies that are not pedagogically effective and/or do not adequately protect user privacy and safety.

Call to Action: Align the use of AI to authentic educational needs and contexts. Be transparent about ways AI has been used in your work, especially in decision-making. Give learners voice and choice in how they leverage AI for learning. Evaluate all digital tools thoroughly and critically before adopting them. (See Workforce EdTech for a comprehensive list of evaluation criteria.)

Educators

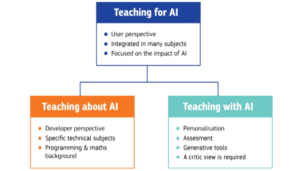

Teachers and other education professionals need technical skills, knowledge, and strategies to use AI tools safely and responsibly and support the AI literacy development of their students. Following the three main categories introduced by the European Digital Education Hub’s (EDEH) Use Scenarios & Practical Examples of AI Use in Education, we’ve laid out key insights and considerations below:

A diagram of AIED teaching categories from EDEH’s report

Teach for AI: cultivating an underlying AI literacy to use AI safely and effectively

Because AI has quickly entered everyday life in new ways, everyone needs to develop foundational competencies to become more discerning, empowered users of AI tools, especially as these become increasingly embedded in digital environments. Teachers need adequate preparation so they can “teach for AI use in everyday life.” Teachers and learners must understand how AI systems are used in society so they can approach these systems with the necessary skills and knowledge around information literacy, privacy, and digital citizenship.

Teach about AI: developing technical skills and preparing for the future of work

Julien Simon, Chief Evangelist Officer from Hugging Face, summarized this point during the DLW plenary session “Generative AI and Education”:

“The toothpaste is out of the tube, and it’s not going back! We need a technical understanding of AI to rise: no matter if you are a doctor, farmer, or factory worker, you will be working with AI. You need to understand the limitations and challenges of AI, as well as understanding that we still need humans to keep AI in check.”

Throughout Digital Learning Week, many participants shared their experiences integrating AI literacy into their curricula, as well as their challenges with teacher training and a lack of standards and vetted resources. Because AI advancements will shape the future of work, we must provide educators with the resources to prepare learners for the technical aspects of understanding, using, and developing AI.

Teach with AI: using AI-enabled tools for teaching and learning

In addition to equipping teachers and learners to navigate and understand AI, we must think about how AI is used in classrooms and programs. Teachers need to know how to evaluate, select, and apply AI-enabled tools with learners, using many of the same principles applied in all strategic edtech integration with the added layer of awareness and critical thinking that using AI requires.

Call to Action: Engage teachers and learners in critical conversations about how AI already impacts them on a day-to-day basis. Adopt and adapt open educational resources that teach AI. Develop training plans that support educators in all roles to develop AI literacy. Integrate AI literacy as an extension of existing digital literacy and resilience efforts. (See BRIDGES Digital Skills Framework, which has “I can” statements aligned to AI for learners in a range of settings.)

As you develop your AI for Learning strategy, we encourage you to put equity, ethics, and educators at the center. Equipping teachers and educating learners is only part of the process. Equity and ethics must be applied to policy-making, research, and educational leadership to ensure AI enhances learning for all.